All the system’s newly written or modified application programs—as well as new procedural manuals, new hardware, and all system interfaces—must be tested thoroughly. Haphazard, trial-and-error testing will not suffice. Testing is done throughout systems development, not just at the end. It is meant to turn up heretofore unknown problems, not to demonstrate the perfection of programs, manuals, or equipment.

Although testing is tedious, it is an essential series of steps that helps ensure the quality of the eventual system. It is far less disruptive to test beforehand than to have a poorly tested system fail after installation. Testing is carried out on subsystems or program modules as work progresses, at many different levels and at various intervals. Before the system is put into production, all programs must be desk checked, checked with test data, and checked to see whether the modules work together as planned.

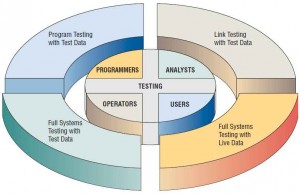

The system as a working whole must also be tested. Included here are testing the interfaces between subsystems, the correctness of output, and the usefulness and understandability of systems documentation and output. Programmers, analysts, operators, and users all play different roles in the various aspects of testing, as shown in the accompanying figure. Testing of hardware is typically provided as a service by vendors of equipment, who will run their own tests on equipment when it is delivered onsite.

Much of the responsibility for program testing resides with the original author(s) of each program. The systems analyst serves as an advisor and coordinator for program testing. In this capacity, the analyst works to ensure that correct testing techniques are implemented by programmers but probably does not personally carry out this level of checking.

At this stage, programmers must first desk check their programs to verify the way the system will work. In desk checking, the programmer follows each step in the program on paper to check whether the routine works as it is written.

Next, programmers must create both valid and invalid test data. These data are then run to see whether the base routines work and to catch errors. If output from the main modules is satisfactory, more test data can be added to check other modules. Created test data should test possible minimum and maximum values as well as all possible variations in format and codes. File output from test data must be carefully verified. It should never be assumed that data contained in a file are correct just because a file was created and accessed.
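As an illustration of valid and invalid test data, the following minimal sketch checks a routine at its minimum and maximum values and with several format variations. The routine `validate_quantity`, its limits of 1 and 999, and the specific test values are assumptions made for this example only; they do not come from any particular system.

```python
def validate_quantity(value, minimum=1, maximum=999):
    """Return True when value is an integer within [minimum, maximum]."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return minimum <= value <= maximum


# Valid test data: both boundary values plus a typical value.
VALID_CASES = [1, 500, 999]

# Invalid test data: just outside each boundary, plus wrong formats.
INVALID_CASES = [0, 1000, -5, "12", 3.5, None]


def run_test_data():
    """Run every case and return the cases that behaved unexpectedly."""
    failures = []
    for case in VALID_CASES:
        if not validate_quantity(case):
            failures.append(("valid rejected", case))
    for case in INVALID_CASES:
        if validate_quantity(case):
            failures.append(("invalid accepted", case))
    return failures
```

An empty list returned by `run_test_data()` means every valid value was accepted and every invalid value was caught. Any entry in the list points to a case the programmer needs to investigate.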

Throughout this process, the systems analyst checks output for errors, advising the programmer of any needed corrections. The analyst will usually not recommend or create test data for program testing but might point out to the programmer omissions of data types to be added in later tests.

When programs pass desk checking and checking with test data, they must go through link testing, which is also referred to as string testing. Link testing checks to see if programs that are interdependent actually work together as planned.

The analyst creates special test data that cover a variety of processing situations for link testing. First, typical test data are processed to see if the system can handle normal transactions, those that would make up the bulk of its load. If the system works with normal transactions, variations are added, including invalid data used to ensure that the system can properly detect errors.
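A link test of this kind might be sketched as follows, assuming two hypothetical interdependent modules: `parse_order`, which reads a transaction line, and `price_order`, which depends on the structure that `parse_order` produces. The field layout and the test transactions are invented for illustration. Normal transactions are run first, and invalid data are then added to confirm that errors are detected rather than passed along.

```python
def parse_order(line):
    """Module A: turn 'item,quantity,unit_price' into a dict.

    Raises ValueError when the line is malformed or out of range.
    """
    parts = line.split(",")
    if len(parts) != 3:
        raise ValueError(f"expected 3 fields, got {len(parts)}")
    item, quantity, unit_price = (p.strip() for p in parts)
    order = {
        "item": item,
        "quantity": int(quantity),        # raises ValueError if not numeric
        "unit_price": float(unit_price),  # raises ValueError if not numeric
    }
    if not item or order["quantity"] <= 0 or order["unit_price"] < 0:
        raise ValueError("field out of range")
    return order


def price_order(order):
    """Module B: relies on the dict layout produced by module A."""
    return round(order["quantity"] * order["unit_price"], 2)


def process(line):
    """The linked path under test: module A feeds module B."""
    return price_order(parse_order(line))


# Typical transactions with their expected results.
NORMAL = [("widget,2,3.50", 7.0), ("bolt,10,0.25", 2.5)]

# Variations the linked modules should reject.
INVALID = ["widget,2", "widget,zero,3.50", "widget,-1,3.50"]


def link_test():
    """Return one True/False result per test transaction."""
    results = []
    for line, expected in NORMAL:
        results.append(process(line) == expected)
    for line in INVALID:
        try:
            process(line)
            results.append(False)  # the error went undetected
        except ValueError:
            results.append(True)   # the error was detected as intended
    return results
```

Each module could pass its own program tests and the link test could still fail, for example if `price_order` expected a field name that `parse_order` does not produce. That mismatch is the kind of problem link testing is meant to expose.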

When link tests are satisfactorily concluded, the system as a complete entity must be tested. At this stage, operators and end users become actively involved in testing. Test data, created by the systems analysis team for the express purpose of testing system objectives, are used.

Several factors need to be considered when testing the system with test data:

- Examining whether operators have adequate documentation in procedure manuals (hard copy or online) to afford correct and efficient operation.

- Checking whether procedure manuals are clear enough in communicating how data should be prepared for input.

- Ascertaining if work flows necessitated by the new or modified system actually “flow.”

- Determining whether output is correct and whether users understand that this output is, in all likelihood, how it will look in its final form.

Remember to schedule adequate time for system testing. Unfortunately, this step often gets dropped if system installation is lagging behind the target date.

Systems testing includes reaffirming the quality standards for system performance that were set up when the initial system specifications were made. Everyone involved should once again agree on how to determine whether the system is doing what it is supposed to do. This step will include measures of error, timeliness, ease of use, proper ordering of transactions, acceptable down time, and understandable procedure manuals.
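One way to make such agreed standards checkable is to record each as a numeric limit and compare measured results against it. The sketch below is only an illustration: the standard names and limits are hypothetical, and real values would come from the original system specifications. Measures such as ease of use or the clarity of procedure manuals would need a different form of assessment.

```python
# Hypothetical limits; real ones come from the initial system specifications.
STANDARDS = {
    "error_rate": 0.01,       # maximum fraction of transactions in error
    "response_seconds": 2.0,  # maximum average response time
    "downtime_hours": 4.0,    # maximum downtime per month
}


def unmet_standards(measured, standards=STANDARDS):
    """Return the names of standards that are exceeded or were not measured."""
    unmet = []
    for name, limit in standards.items():
        value = measured.get(name)
        if value is None or value > limit:
            unmet.append(name)
    return unmet


# Example measurements gathered during a system test run (invented values).
measured = {"error_rate": 0.004, "response_seconds": 2.6, "downtime_hours": 1.5}
print(unmet_standards(measured))  # prints ['response_seconds']
```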

When systems tests using test data prove satisfactory, it is a good idea to try the new system with several passes on what is called live data, data that have been successfully processed through the existing system. This step allows an accurate comparison of the new system’s output with what you know to be correctly processed output, and it gives a good indication of how actual data will be handled. Obviously, this step is not possible when creating entirely new outputs (for instance, output from an ecommerce transaction from a new corporate Web site). As with test data, only small amounts of live data are used in this kind of system testing.
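The comparison against known-correct output can be sketched as below. The transaction identifiers and amounts are invented for illustration, and the sketch assumes that both systems produce output that can be keyed by a shared transaction identifier.

```python
def compare_outputs(known_good, new_output):
    """Return {transaction_id: (existing_value, new_value)} for every mismatch.

    A transaction missing from one side appears with None on that side.
    """
    differences = {}
    for txn_id in sorted(set(known_good) | set(new_output)):
        old = known_good.get(txn_id)
        new = new_output.get(txn_id)
        if old != new:
            differences[txn_id] = (old, new)
    return differences


# Output already processed correctly by the existing system (invented values).
existing = {"T001": 125.00, "T002": 89.99, "T003": 410.50}

# Output from the new system for the same live data (invented values).
candidate = {"T001": 125.00, "T002": 89.90, "T003": 410.50}

mismatches = compare_outputs(existing, candidate)
print(mismatches)  # prints {'T002': (89.99, 89.9)}
```

An empty result means the new system reproduced the existing system's output for that pass. Each entry in the result identifies a transaction to trace back through the new system.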